AI Helping Robots Understand Our Language

Artificial intelligence (AI) is one of the most complex technology fields, in part because it’s constantly evolving and adapting to industry needs. Using AI in robots adds further complexity because these systems must understand human language. AI and robots mimic people’s behavior and thoughts in various ways.

Together, they have the potential to hold conversations, multitask and translate. While AI has already helped robots reach new heights, there is still room for improvement before the combination is as effective as it could be.

NLP and Deep Learning

Natural language processing (NLP) and deep learning are the reasons robots can comprehend language. On a smaller, more subtle scale, a chatbot will use one of these processes to adapt to consumers’ inquiries and resolve them accordingly. Over time, the chatbot develops and learns more.
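To make the idea of a chatbot that "develops and learns more" concrete, here is a minimal sketch in Python. It is a toy, not how any production chatbot works: the `ToyChatbot` class, its methods and the intent names are all hypothetical, and it matches inquiries by simple word overlap rather than real NLP or deep learning.

```python
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return [w.strip(".,!?") for w in text.lower().split() if w.strip(".,!?")]


class ToyChatbot:
    """Hypothetical bag-of-words intent matcher that learns from examples."""

    def __init__(self):
        # intent name -> counts of words seen in inquiries with that intent
        self.word_counts = defaultdict(Counter)

    def learn(self, inquiry, intent):
        """Record a labelled inquiry so later matches improve."""
        self.word_counts[intent].update(tokenize(inquiry))

    def classify(self, inquiry):
        """Return the intent whose known words overlap most, or None."""
        words = tokenize(inquiry)
        best_intent, best_score = None, 0
        for intent, counts in self.word_counts.items():
            score = sum(counts[w] for w in words)
            if score > best_score:
                best_intent, best_score = intent, score
        return best_intent


bot = ToyChatbot()
bot.learn("Where is my order?", "order_status")
bot.learn("I want a refund for my purchase", "refund")

print(bot.classify("Can I get a refund?"))      # refund
print(bot.classify("Do you sell gift cards?"))  # None: nothing learned yet

# After seeing one labelled example, the bot can handle similar inquiries.
bot.learn("Do you sell gift cards?", "gift_cards")
print(bot.classify("gift cards please"))        # gift_cards
```

The point of the sketch is only the feedback loop: each new labelled inquiry widens what the bot can recognize, which is the behavior described above in miniature.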

However, NLP and deep learning take different approaches. Historically, NLP has relied on coding and machine learning algorithms to help AI understand human language. Newer software may be code-free, though, so NLP is by no means obsolete.

Deep learning takes that foundation and expands upon it for robots. It uses neural networks to mimic the human brain, constantly learning based on new stimuli and information. Though this pairing is undeniably what makes AI so powerful, specialists in the field must focus on eliminating latency and inaccuracies while building trust. The development of AI and related technology has already simplified everyday life and delivered cost savings.

Since the human brain is so complex, AI will not be able to replicate it completely, or respond to people the way another person would, any time soon. However, some innovations are making strides.

Recent AI Developments for Robots

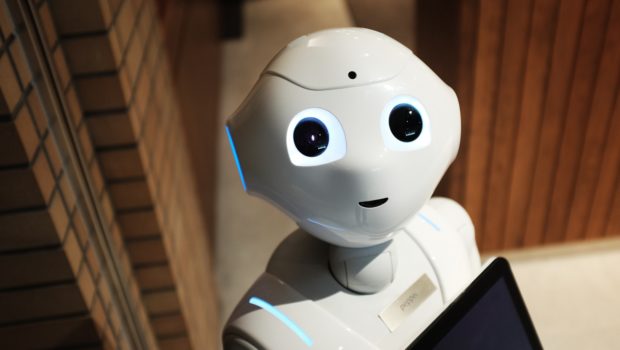

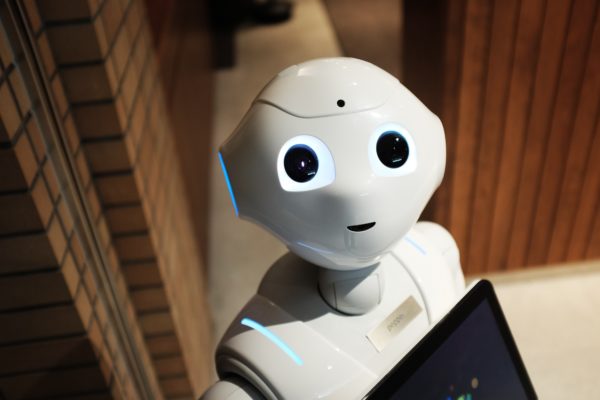

A few years ago, complex language processing was still coming into use. Now, it’s a common feature of robotics systems throughout the world. Pepper and NAO are two examples of robots that consumers can buy and program to their liking, whether for retail work or education.

A team from the Edinburgh Centre for Robotics has developed a new system for language processing. Multimodal Intelligence inteRactIon for Autonomous systeMs (MIRIAM) comes from Heriot-Watt University and the University of Edinburgh. It lets workers and robots communicate with each other by asking and answering questions, giving explanations and understanding behavior.

This step forward will be invaluable for industry work of all kinds since robotic systems are necessary for jobs from underwater observation and exploration to taking inventory in warehouses. Workers in these fields can guide robots to complete tasks efficiently, all through speaking the same language.

Elsewhere, MIT researchers are working on a new way to train AI to learn languages. This method closely resembles how children learn to speak: through observation. AI systems watch videos with captions and learn which actions and words go together. Robots then get a better understanding of how to respond to commands and questions correctly and without latency.
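The idea of learning which words and actions go together can be illustrated with a small co-occurrence count. This is not the MIT researchers' method, only a toy sketch of learning by observation. The clip data, the action labels and the `best_label` helper are all made up for illustration, and the labels stand in for what a vision system might detect in each video.

```python
from collections import Counter, defaultdict

# Hypothetical training data: each caption is paired with the labels an
# imagined vision system detected in the same video clip.
clips = [
    ("the woman picks up the cup", {"pick_up", "cup"}),
    ("the man picks up the box", {"pick_up", "box"}),
    ("the man puts down the cup", {"put_down", "cup"}),
    ("the woman puts down the box", {"put_down", "box"}),
]

cooccur = defaultdict(Counter)  # word -> how often each label appeared with it
word_totals = Counter()         # word -> number of clips containing it

for caption, labels in clips:
    for word in set(caption.split()):
        word_totals[word] += 1
        for label in labels:
            cooccur[word][label] += 1


def best_label(word):
    """Return the label most consistently seen with the word, or None."""
    if word not in cooccur:
        return None
    label, count = cooccur[word].most_common(1)[0]
    # Only trust associations that hold in every clip containing the word.
    return label if count == word_totals[word] else None


print(best_label("picks"))  # pick_up
print(best_label("cup"))    # cup
print(best_label("the"))    # None: "the" appears with every label
```

Words such as "the" appear alongside every action, so no single label stands out for them, while "picks" and "cup" each line up with exactly one label. With only captions and observations, the system starts to separate meaningful words from filler.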

Areas to Improve

AI has some obstacles to work through before things can move forward. It needs creative, out-of-the-box thinking, a better grasp of conversational context and the ability to fill in missing pieces on its own. Developers must accomplish these tasks while keeping AI both sustainable and practical.

While AI professionals try to create better systems for robots, one of the biggest issues is earning the public’s trust. With big tech companies under federal investigation and the ever-present distrust of robots in general, AI must strengthen its reputation. To do so, developers and manufacturers need to provide more transparency about how robots use data.

Based on MIT’s and Edinburgh researchers’ progress, robots could learn from humans more efficiently by understanding the language. They’ll then be better able to respond. Eventually, they may even be able to teach other robots using these same methods.

The Next Big Task

Once the industry tackles the current obstacles, AI can use NLP and deep learning so that robots can hold natural conversations with humans. Eventually, robots must also be able to speak and translate multiple languages. It’s only a matter of time before that step becomes a reality.