Here’s how you should approach A/B testing for marketing

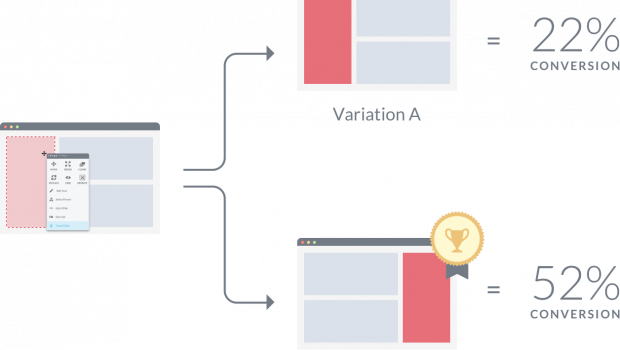

The most effective way to optimize your marketing campaign is to test and experiment. It allows you to reverse engineer an optimized and effective campaign from the results you get.

A/B testing is now a commonly used way to test variables, especially in email marketing. It can help you get even seemingly small details right, such as the length of the subject line, which could in turn have a great impact on your results.

Here are some rules or best practices to keep in mind when split testing your campaigns:

- Kick off with a hypothesis

A hypothesis sets the objective of the test and gives direction to your A/B testing process.

Simply put, it is a statement that you are aiming to prove or disprove through split testing. Write the hypothesis down. As basic as this may seem, it gives you clarity and helps you identify the variables and the result metrics for the test.

For instance, consider the following hypothesis: ‘Having a quote or a statement from a customer on the landing page will boost sign-ups’. Now you know the idea you are testing, the variable (having a customer quote on the landing page, in this case) and the result metric to follow (number of sign-ups, in this case).

- Make sure to test only one parameter per test

Keep in mind that a single A/B test can only test one variable. To draw conclusive results that you can turn into actionable takeaways, your test must focus on that one variable alone.

The logic is simple. If you change three or four variables in one split test, you won’t know which variable, or combination of variables, produced the results, and that defeats the whole point of the test.

For instance, let’s assume you want to test the length of the subject line for your email marketing newsletter. Come up with two subject lines (a short one and a long one) that you think will be most impactful. Keeping other aspects such as body of the email, CTAs, preheaders etc. constant, divide your email list into two sets and use one subject line for each set. The metric you will be using to measure the results is the email open rate.

This way, you will be able to reliably draw conclusions about the most effective length of a subject line.
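The subject-line test above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function names are hypothetical, and the two-proportion z-test is an assumption added here as one common way to check whether a gap in open rates is larger than chance would explain.

```python
import math
import random

def split_list(contacts, seed=42):
    """Shuffle a copy of the contact list and cut it into two halves."""
    shuffled = list(contacts)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def open_rate(opens, sends):
    """Fraction of sent emails that were opened."""
    return opens / sends if sends else 0.0

def two_proportion_z_test(opens_a, sends_a, opens_b, sends_b):
    """Return (z, two-sided p-value) for the difference between two open rates."""
    p_a = opens_a / sends_a
    p_b = opens_b / sends_b
    pooled = (opens_a + opens_b) / (sends_a + sends_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so no detectable difference
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical numbers: short subject line (A) vs long subject line (B).
z, p = two_proportion_z_test(opens_a=220, sends_a=1000, opens_b=180, sends_b=1000)
print(f"open rate A={open_rate(220, 1000):.1%}, B={open_rate(180, 1000):.1%}, p={p:.3f}")
```

A small p-value suggests the difference in open rates is unlikely to be noise; with small lists, even a visible gap may not be conclusive.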

- Have a clear idea about your success parameters

Even before you get started on the test, decide how you are going to measure the success or the failure of a test statement; clearly identify what key metrics you are going to follow to measure the results of the test.

Remember that the success metric you choose should be relevant to the variable you want to test and directly dependent on it, ideally responding strongly when that variable changes. For example, you should use email open rates and not click-through rates to test email subject lines, because the subject line is what a recipient sees before deciding whether to open.

Let’s say you want to increase the conversion rate of your home page. One hypothesis you want to test is to include snippets of customer testimonials to improve the conversion rate. In this scenario, the variable will be the inclusion of testimonial snippets and the result metric will be the conversion rate.

The above example is pretty straightforward, but there is definitely scope for error when multiple metrics are involved.

A common blunder A/B testers make is to keep track of multiple metrics and choose afterwards which one to rely on. This leads to confusion and indecisiveness, and it makes it tempting to pick whichever metric happens to look best.

A more pragmatic way is to identify the variable and the result metric at the start of the test and stick to it.
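One way to hold yourself to this is to write the plan down in a form that cannot be edited once the test starts. The sketch below is only an illustration; `ExperimentPlan` and `evaluate` are hypothetical names, and the result figures are made up.

```python
from dataclasses import dataclass

@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class ExperimentPlan:
    hypothesis: str
    variable: str
    primary_metric: str

plan = ExperimentPlan(
    hypothesis="Testimonial snippets on the home page improve the conversion rate",
    variable="testimonial snippets shown (yes/no)",
    primary_metric="conversion_rate",
)

def evaluate(plan, results_a, results_b):
    """Compare only the metric named in the plan, ignoring any others tracked."""
    metric = plan.primary_metric
    return results_b[metric] - results_a[metric]

# Hypothetical results: other metrics may be recorded, but they do not decide the test.
control = {"conversion_rate": 0.03, "bounce_rate": 0.50}
variant = {"conversion_rate": 0.04, "bounce_rate": 0.60}
lift = evaluate(plan, control, variant)
print(f"Change in {plan.primary_metric}: {lift:+.2%}")
```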

- Ensure that the sample selected is sufficiently random

Without realizing it, you can sometimes introduce unintended variables into your sample. This occurs when the sample you created is not random enough.

For instance, suppose you created a sample in which you unwittingly selected mostly Asians. Without intending to or realizing it, you have created a demographic variable in the test sample.

To minimize the probability of such inadvertent variables, ensure that your sample selection process is as random as possible. One idea is to use computer-generated random numbers to select the contacts from your email list. Choosing alphabetically is simpler, but it is not truly random, since names can correlate with region or background.

The important thing is that the sample should reflect the mix of people in your overall customer pool.

The bottom line is that your sample selection process must be such that every person on the list has the same probability of getting selected. Also, before using the sample, scan the data to see if there are any unseen variables.
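As a rough sketch of both ideas, the snippet below draws a sample in which every contact has the same chance of selection, then compares the sample's make-up with the full list. The field name `region`, the helper names and the contact data are all assumptions for illustration.

```python
import random
from collections import Counter

def draw_sample(contacts, size, seed=None):
    """Pick `size` contacts without replacement, each equally likely."""
    rng = random.Random(seed)
    return rng.sample(contacts, size)

def share_by(contacts, field):
    """Fraction of contacts that fall under each value of `field`."""
    counts = Counter(c[field] for c in contacts)
    total = len(contacts)
    return {value: n / total for value, n in counts.items()}

def max_share_gap(population, sample, field):
    """Largest difference between population and sample shares for `field`."""
    pop = share_by(population, field)
    smp = share_by(sample, field)
    return max(abs(pop[v] - smp.get(v, 0.0)) for v in pop)

# Hypothetical contact list with a demographic field to scan.
contacts = [{"email": f"user{i}@example.com", "region": r}
            for i, r in enumerate(["Asia", "Europe", "Americas"] * 100)]
sample = draw_sample(contacts, size=60, seed=7)
print(share_by(sample, "region"))
print("largest gap vs full list:", round(max_share_gap(contacts, sample, "region"), 3))
```

If the gap for some field is large, the sample is skewed on that field and is worth redrawing before the test runs.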

- Make sure to not skimp on the documentation

Another mistake that many marketers make when A/B testing is ignoring documentation. You should take the time to record your results and observations, more so if you are someone who frequently uses split testing to test out a number of factors.

This way, you won’t make the mistake of repeating tests you have already run, and you can build on your past lessons and findings.

Also, it is much easier to explain your findings to your successors and new team members when they are neatly recorded and presentable. Even in your absence, your team can see and understand the test results for themselves.

In fact, you can consider using tools with features such as email notes and reminders to make a habit of documenting your findings and insights regularly. You can compile all these notes into a document later on.
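If you prefer something lighter than a dedicated tool, a plain log file can serve the same purpose. The following is a minimal sketch that appends one record per test to a JSON Lines file; the file name, field names and example values are all assumptions.

```python
import json
from datetime import date

def log_test(path, hypothesis, variable, metric, result, notes=""):
    """Append one test record as a single JSON line and return it."""
    record = {
        "date": date.today().isoformat(),
        "hypothesis": hypothesis,
        "variable": variable,
        "metric": metric,
        "result": result,
        "notes": notes,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record

def load_log(path):
    """Read every recorded test back as a list of dictionaries."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

A call such as `log_test("ab_tests.jsonl", "Short subject lines get more opens", "subject line length", "open_rate", "A: 22%, B: 18%")` adds one entry, and `load_log("ab_tests.jsonl")` returns the full history for a teammate to review.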

If you have no problem publishing and sharing your test results, you can also create a long blog post including all your findings/insights and publish it on your company’s blog.

Wrapping up

Finally, don’t forget that although A/B testing is a powerful technique to optimize your marketing campaigns, amongst other things, overusing it is definitely not advisable.

It really doesn’t make sense to test any and every small aspect. You must also learn to draw conclusions based on experts’ suggestions on the web and your own common sense. The point is to recognize when your split testing is no longer delivering a worthwhile ROI. Also, using the right tools can make your job a lot easier.
