CGI Isn’t Getting Better, So Why Are Movies Still Using It?

We’ve all seen a movie with computer-generated imagery, also known as CGI. In some cases, the results are mind-blowing, in a good way. However, more and more of us are starting to turn against CGI for one crucial reason: it’s not getting any better.

What Is CGI?

A wide range of industries rely on computer-generated imagery to do their jobs. Everyone from architects to visual artists to engineers to game designers uses this method to crystallize their ideas or to bring them fully to life.

There are multiple ways to incorporate CGI into a project, too. For instance, ray tracing lets designers generate images that look three-dimensional by simulating how rays of light travel through a scene, so that light and shadow fall on each shape as they would in reality.

In the long history of computer graphics, CGI in movies came relatively late in the game. The first electronically generated graphic images appeared in 1950, thanks to Ben Laposky’s oscilloscope art.

Thirteen years later came the computer mouse, a vital tool for creating such images on a computer. Three decades after that, in 1993, came Mosaic, the first widely popular graphical web browser. Two years later, in 1995, the first fully computer-animated feature film, Toy Story, was released.

Why Isn’t CGI Improving?

It’s not necessarily accurate to say CGI hasn’t improved at all; the bigger problem is that filmmakers are relying on it too heavily. When CGI first appeared in movies, it didn’t have the seemingly limitless applications it has today. As a result, filmmakers used it sparingly, as a little seasoning in a mostly live-action feature.

Nowadays, though, CGI has become the primary medium of storytelling for some movies. Instead of treating it as a special tool that makes certain moments feel more real or more spectacular, film studios are building full-length features around it. That approach works in only a handful of scenarios; the rest of the time, audiences don’t like it.

Another reason CGI has lost its flair is that it can’t keep up with the picture quality we’re getting. Filmmakers used to reserve CGI for settings where it wouldn’t be so visually apparent. Rainy, dark scenes? Perfect for CGI! Back then, too, it didn’t stand out as much, since screen quality wasn’t nearly what it is today.

So, combine the crystal-clear TVs in our homes with heavy use of CGI in both dark and bright scenes, and you can see the problem, literally. It just doesn’t look as good as it used to.

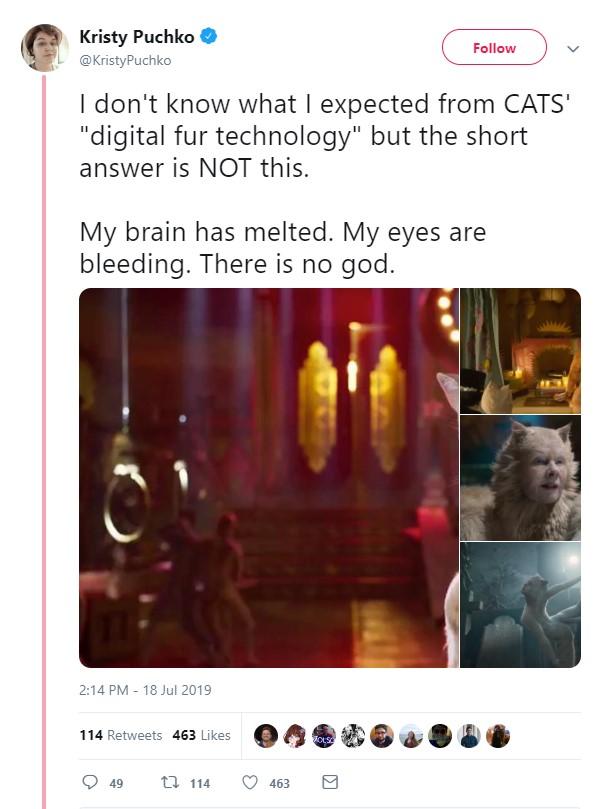

Even trailers for such CGI-heavy stories have proven to be turn-offs for today’s audiences. Just look at Universal’s recent teaser for its upcoming musical, Cats. Many who watched the trailer reacted with horror at how heavily every actor had been CGI-ed. They stand on two feet, but fur covers them from head to toe. They have cat ears, but human faces. Everything about it seemed overdone.

Twitter user Kristy Puchko wrote, “I don’t know what I expected from Cats ‘digital fur technology,’ but the short answer is NOT this. My brain has melted. My eyes are bleeding. There is no god.” Exaggerated as it is, Puchko’s tweet reflects what many audience members feel: tired of so much CGI in so many movies, especially in those where it loses its grip on reality.

Does Anyone Do It Well?

Once upon a time, audiences and critics alike heralded CGI as a great method for making the impossible seem possible. Think of classics like Jurassic Park and Terminator 2. These two movies paved the way for CGI usage today because they were so visually stunning.

Indeed, some more recent movies have masterfully incorporated CGI into stunning visuals. For instance, Christopher Nolan, who directed the Batman trilogy starring Christian Bale, seems to know how to sprinkle in just enough digital imagery. He uses CGI only when he has to: either when he can’t afford to build and film something real, or when his vision is physically impossible. He seems to understand something about moviegoers that many other filmmakers have missed. As he has said, “However sophisticated your computer-generated imagery is, if it’s been created from no physical elements and you haven’t shot anything, it’s going to feel like animation.”

Similarly, the Jurassic Park series mentioned above has always done a great job of combining its real and digital moments. You’re probably wondering how a movie about dinosaurs reappearing in the present day could have real elements. The movies pair animatronic creatures for close-ups with CGI for wider shots, where the physical dinosaurs wouldn’t look as convincing.

But we’re here to talk about the majority of CGI-infused movies that take things too far. Why don’t we like them?

Why Don’t We Like CGI?

One significant reason audiences dislike the practice has a name: the uncanny valley. The term describes CGI characters and other creations that closely, but imperfectly, resemble a human face and come across as off-putting as a result. We don’t like to see toys, robots, CGI images or anything else that imperfectly resembles a human. It makes us feel, as the name of the phenomenon puts it, uncanny. We may get an eerie feeling, or even one of outright disgust.

The “valley” part of the phrase describes the shape of our response. We react positively to humanoid figures that clearly don’t look like us, and more positively still as they look more humanlike, right up until they look almost, but not quite, human. At that point our comfort drops sharply, forming a dip, or valley, before recovering for figures that are indistinguishable from real people. Unfortunately, many CGI characters land in that dip, which is why digitized people can put us off.

Of course, every audience member is different, and further reasons for disliking CGI will come down to preference. But a common complaint is that too many movies rely too heavily on the technology: too much tech, too little reality to ground the film.

So… What Can We Do?

It’s hard to say what the future of CGI will be. Filmmakers cling to it, while moviegoers have started to groan about its constant use. Some of those complaints have already had an effect on the industry.

For example, the much-anticipated trailer for the Sonic the Hedgehog movie dropped on April 30, 2019, but it did not delight fans. Instead, many people complained about the beloved character’s new look.

In the case of Sonic, these opinions carried serious weight with the film’s director, Jeff Fowler. He took to Twitter to assure audiences that he would change the title character’s look before the film’s release, in response to viewers’ feedback.

Of course, not every director will be this receptive, nor will it always be this obvious that a movie needs a CGI rethink before it hits theaters. But there is one way to push more filmmakers to step it up and return to the just-enough CGI era we once lived in: support the movies and directors who do it well.

If their style makes money, others might take note. Then, we could get back to realistically fantastic filmmaking, just the way we like it.