How To Automate Your Search By Building A Web Crawler From Scratch

Fact: Google provides us with all the information we need via a simple search. Thanks to the advent of the internet, we can get most of our needs (at least informational ones) fulfilled online. The beauty of all this is that the information available online is ever expanding and is being added to daily. In today’s digital age, data is key.

Data is the digital currency of the online world. People understand this, and so do businesses. According to a study by Accenture, 83% of enterprise executives have pursued big data projects to gain a competitive advantage. Figures like this make a strong case for collecting and analyzing data.

The more we interact with the internet, the more data is generated. You can use that data to analyze trends, competitors and more. The need to collect and assess data has led to the development of the field of data science. More and more programs are being built to help individuals and enterprises collect, categorize and structure the data available online.

Data collection isn’t only for businesses and developers. As an individual, you can also perform simple data collection. Say you visit an e-commerce shop and would like to know the prices of every product of a certain kind; a web scraper can gather them for you.

If that sounds unfamiliar, let’s take a quick look at what a web scraper is.

What is a web scraper?

The literal definition of a web scraper is that it’s a tool used to scrape information off of websites online. For example, you want to know the prices for products in a certain category in an e-commerce shop. You can use a web scraping tool to collect and structure this data.

There are some minimum requirements you’ll need to meet for the tool to work successfully, but we’ll look at that later.

Defined in a bit more depth, a web scraper is a tool (an internet bot) that extracts or indexes particular content on a website. It can be a script or a program, written in any of several languages, whose aim is to gather data or information from a website.

Web scrapers normally fall into four types:

General purpose web scrapers

These are the ones used by search engines and web providers. They require high-speed internet and substantial storage. These bots are instructed to scrape as many pages as they can, starting from a set of URLs. They’re the best option if you’re looking to gather large sets of data.
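The idea of starting from a set of URLs and scraping as many pages as possible can be sketched as a breadth-first crawl. This is a minimal illustration, not how any particular search engine works: the `crawl` function, the `LinkParser` class and the in-memory `FAKE_SITE` dictionary are all hypothetical, and `FAKE_SITE` stands in for real HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl: visit each reachable URL once, up to max_pages."""
    queue = deque(seed_urls)
    seen = set(seed_urls)
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be fetched
            continue
        visited.append(url)
        parser = LinkParser()
        parser.feed(html)
        for link in parser.links:
            if link not in seen:  # never queue the same URL twice
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical in-memory "site" standing in for real HTTP requests.
FAKE_SITE = {
    "/": '<a href="/shoes">Shoes</a> <a href="/bags">Bags</a>',
    "/shoes": '<a href="/">Home</a> <a href="/shoes/1">Runner</a>',
    "/bags": '<a href="/">Home</a>',
    "/shoes/1": "<p>No links here</p>",
}

pages = crawl(["/"], FAKE_SITE.get)
print(pages)
```

The `seen` set is what keeps the crawler from looping forever on pages that link back to each other, and `max_pages` caps how much bandwidth and storage a run can consume.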

Incremental web scrapers

These types of web scrapers are best used when you have already crawled a page and just need the new information that has since been added to it. They only fetch what has changed at a given URL since the last crawl.

Note that an incremental scraper doesn’t download the old, already crawled information again; it only adds to it. This makes it a great option, as it saves storage space and shortens crawling time.
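One simple way to approximate this behaviour is to remember a fingerprint of each page and keep only pages whose fingerprint has changed. The sketch below is an assumption about how one might do it, not a description of any specific tool; the names `content_hash` and `incremental_crawl` are hypothetical. Note that this version still fetches every page in order to compare it, so it saves storage and processing rather than bandwidth. Real crawlers can avoid the download as well by using HTTP conditional requests (the `ETag` and `If-Modified-Since` headers).

```python
import hashlib


def content_hash(html):
    """Fingerprint a page so changes can be detected cheaply."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def incremental_crawl(urls, fetch, seen_hashes):
    """Return only pages that are new or changed since the last run.

    seen_hashes maps URL -> hash from the previous crawl; it is updated in place.
    """
    changed = {}
    for url in urls:
        html = fetch(url)
        digest = content_hash(html)
        if seen_hashes.get(url) != digest:
            changed[url] = html
            seen_hashes[url] = digest
    return changed


# Hypothetical pages standing in for a live site.
site = {"/shoes": "<p>Runner: $50</p>", "/bags": "<p>Tote: $30</p>"}
state = {}

first = incremental_crawl(site, site.get, state)   # first run: everything is new
site["/shoes"] = "<p>Runner: $45</p>"              # the price changes on one page
second = incremental_crawl(site, site.get, state)  # second run: only /shoes changed

print(sorted(first), sorted(second))
```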

Focused web scrapers

These are scrapers that gather data from a certain URL based on a predefined set of instructions. This is the type of scraper we’re going to use for our e-commerce example. It also has the advantage of not needing much bandwidth or storage space to run.

Deep web scrapers

If you want to crawl all the information on a particular website, this type of scraper is the best option. Some sites have content that is hidden from public view, for example behind search forms or logins, so search engines can’t index it. Deep web scrapers gather the data on a website including this content that search engines can’t reach.

Web scrapers are useful tools that offer a great way to collect data and information from web pages in an easy and structured format. They give us quick access to information that would otherwise be tedious to get, either because you’d need to do a lot of searching or quite a bit of copying and pasting.

Now that we know what a web scraper is, let’s have a look at some requirements for using a web scraper.

The requirements for using a web scraper

There are many web scraping tools out there (more on this later), some more complex than others. Some are quite simple and made for people who can’t code, while others require some understanding of programming languages.

Overall, to use web scrapers properly and efficiently, there are some prerequisites you need to know:

HTML

Most websites use HTML in their formatting. To tell the web crawler which data to gather, you’ll need to provide the HTML tags that contain it. This is especially the case if you’re writing the scraper yourself in Python (the language most often recommended for beginners).

You’ll need an understanding of opening and closing tags, class and id attributes, and the other common HTML elements.
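To make this concrete, here is a minimal sketch of how tags and class attributes are used to pick out data, written with Python’s built-in `html.parser` module. The HTML snippet, the `price` class name and the `PriceParser` class are made up for illustration; a real page will use its own markup, which you can find with your browser’s “Inspect” tool.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collects the text of every element whose class attribute includes 'price'."""

    def __init__(self):
        super().__init__()
        self.prices = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs, e.g. [("class", "price sale")]
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        # Good enough for flat markup like the sample below; nested tags
        # inside a price element would need a depth counter.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


# Hypothetical product listing.
HTML = """
<ul id="products">
  <li><span class="name">Runner</span> <span class="price">$50</span></li>
  <li><span class="name">Trail</span> <span class="price sale">$65</span></li>
</ul>
"""

parser = PriceParser()
parser.feed(HTML)
print(parser.prices)
```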

The good news is HTML is easy to learn and understand. A simple visit to Khan Academy or freecodecamp will give you all the information you need.

Python basics

You’ll also need to know how to use Python. Python is one of the easiest programming languages for a beginner to learn, its syntax is easy to understand compared to other languages, and it has well-known libraries for data analysis and visualization.

Other than that, you’ll also need to know how to create a virtual environment for your Python project. This helps avoid conflicts when dealing with multiple projects in Python.
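Virtual environments are usually created from the command line, but Python’s standard `venv` module can also be called from a script. The sketch below does roughly what `python -m venv --without-pip .venv` does; the temporary project folder and the `.venv` directory name are assumptions chosen for illustration.

```python
import tempfile
import venv
from pathlib import Path

# Hypothetical project folder; a temporary directory keeps this sketch tidy.
project_dir = Path(tempfile.mkdtemp(prefix="scraper-"))
env_dir = project_dir / ".venv"

# with_pip=False keeps creation fast; pass with_pip=True if you plan to
# install libraries into the environment.
venv.create(env_dir, with_pip=False)

# Every virtual environment contains a pyvenv.cfg file at its root.
print((env_dir / "pyvenv.cfg").exists())
```

Each project gets its own environment folder, so libraries installed for one project can’t clash with the versions another project needs.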

Python libraries

You’ll also need to know how to install Python libraries. This is done after the virtual environment is set up and activated, usually with pip. Most libraries install with a single command, though a few need extra system dependencies.

JSON

You’ll also need to know how to save and parse data in JSON. JSON stands for JavaScript Object Notation.
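Saving and parsing JSON takes only a few lines with Python’s built-in `json` module. In this minimal sketch the product records and the `products.json` file name are made up for illustration.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical scraped records.
products = [
    {"name": "Runner", "price": 50.0},
    {"name": "Trail", "price": 65.0},
]

out_path = Path(tempfile.mkdtemp()) / "products.json"

# Save: serialize the Python list to a JSON file.
with out_path.open("w", encoding="utf-8") as f:
    json.dump(products, f, indent=2)

# Parse: read the file back into Python lists and dictionaries.
with out_path.open(encoding="utf-8") as f:
    restored = json.load(f)

cheapest = min(restored, key=lambda product: product["price"])
print(cheapest["name"], cheapest["price"])
```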

Tools you can use for web scraping

As we said earlier, there are many tools that can be used for web scraping. Each tool meets the needs of a certain subset of users. Let’s have a look at some tools that can be used for web scraping. To make it easier, we have subdivided the tools based on the types of users.

1. For Developers

Scraper API

Sharon Blackwood, who works for domywriting review, says, “This is quite the powerful scraping tool meant to be used by developers. It makes it easier for them to handle proxies with its pool of hundreds and thousands of proxies.”

The tool also handles browsers and CAPTCHAs so that the developer can get all the raw HTML data they need from a web page.

It can also automatically throttle requests to help you avoid IP bans and CAPTCHA challenges.
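If you write your own scraper, you can throttle requests yourself. The following is a generic sketch of the idea, not Scraper API’s actual implementation: the `Throttle` class is hypothetical, and it simply enforces a minimum delay between consecutive requests.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock    # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None      # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


throttle = Throttle(min_interval=0.05)
start = time.monotonic()
for url in ["/page/1", "/page/2", "/page/3"]:
    throttle.wait()  # pause here, then make the (hypothetical) request for url
elapsed = time.monotonic() - start
print(f"{elapsed:.2f}s elapsed for 3 requests")
```

The first call returns immediately; every later call sleeps only for whatever part of the interval has not already passed.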

Cheerio

Another tool for developers, Cheerio is an HTML parsing library for Node.js. It offers a variety of methods for extracting HTML and its associated elements.

It’s quick and offers an API similar to jQuery. If you’re familiar with that library, you’ll feel right at home using this tool.

Beautiful Soup

This web scraping tool is suited for those developers familiar with the Python language. It offers an interface that makes it easier for Python developers to parse HTML.

What makes this tool special is that it has been around for some time now (more than a decade). It’s time-tested and has a fair amount of documentation and tutorials online.

2. For Beginners

ParseHub

ParseHub is a popular tool used by journalists and data scientists alike. This tool has a paid version, but also offers a generous free tier.

The tool makes it easy for anyone without coding knowledge to scrape web pages. The web scraper is powerful and simple to use at the same time. It offers features like automatic IP rotation and deep scraping.

In addition, it allows you to scrape data by clicking on the elements of a web page that you’re interested in.

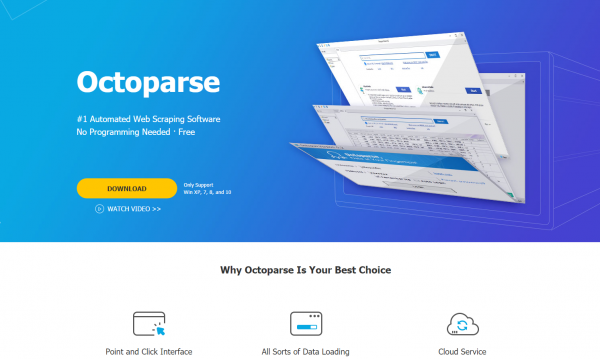

Octoparse

Here’s another tool that also allows users to scrape web content without needing to know how to code.

Like ParseHub, it’s a paid tool with a generous free tier. It also lets users scrape web content through a simple point-and-click interface. Better yet, it has a cloud solution for those who want their scraping to run online.

3. For Enterprise Users

Diffbot

Diffbot is more of a solution for enterprises than for individuals. This scraping tool doesn’t even use HTML parsing. It uses computer vision.

This proprietary solution is used to identify information on a web page by using its visual aspects rather than the HTML code. The advantage of this is that the web scraper won’t break when the HTML code on the page is changed (as long as the page remains the same visually).

Mozenda

With amazing customer support, Mozenda is another enterprise solution to consider. The tool offers a cloud solution to web scraping and has scraped over 7 billion pages. This scraper tool is powerful and scalable as well. Overall, it makes a great solution for enterprise clients.

Automate Your Search using Octoparse

Let’s get back to our example of trying to scrape an e-commerce site to get the prices of a certain item on the site. For this scenario we’re going to use Octoparse. Let’s begin:

- At this point, we’ll assume you have Octoparse installed and set up. There are two modes that you can use: Wizard Mode and Advanced Mode.

Advanced mode is for users that are a bit more technical and meet the prerequisites stated above. On the other hand, Wizard mode offers the point and click interface that makes it easier to build a scraper.

- You’ll find a list of options after selecting Wizard mode. From here, select “Create” under “List and Detail Extraction”.

- After this, you’ll enter the URL from which you’d like to extract the data. In our case, it’d be the URL for the e-commerce store.

- Click on the items you’d like scraped. In our case, that would be a category, say Shoes, or a particular product. You’ll also select the price value on the site. Click “Next”.

- The next step is to “Enable Pagination”, especially if there are multiple product pages. In Octoparse, click on the site’s “Next” or “>>” button (whichever the site uses), then select the number of pages you’d like parsed.

- Octoparse will then take you to a page where you select the values you’d like extracted. In our scenario, those would be “shoes” and “price”. Click “Next”.

- That’s it! Your web scraper is ready. Click “Local extraction” to start extracting data.
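For readers who meet the prerequisites above, the same workflow (start from a URL, pick the name and price fields, follow the “Next” link, stop after a chosen number of pages) can be sketched by hand in Python. This is not how Octoparse works internally. The `ListingParser` class, the `scrape_listing` function, the `name`, `price` and `next` class names and the `FAKE_SHOP` pages are all hypothetical stand-ins for a real store.

```python
from html.parser import HTMLParser


class ListingParser(HTMLParser):
    """Extracts (name, price) rows and the 'next' link from one listing page."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self.next_url = None
        self._field = None    # which field the upcoming text belongs to
        self._current = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "a" and "next" in classes:
            self.next_url = attrs.get("href")
        elif "name" in classes:
            self._field = "name"
        elif "price" in classes:
            self._field = "price"

    def handle_data(self, data):
        if self._field and data.strip():
            self._current[self._field] = data.strip()
            if self._field == "price":  # in this layout the price ends a row
                self.rows.append(self._current)
                self._current = {}
            self._field = None


def scrape_listing(start_url, fetch, max_pages):
    """Follow 'next' links from start_url, reading at most max_pages pages."""
    rows, url, pages_read = [], start_url, 0
    while url and pages_read < max_pages:
        parser = ListingParser()
        parser.feed(fetch(url))
        rows.extend(parser.rows)
        url = parser.next_url  # None on the last page, which ends the loop
        pages_read += 1
    return rows


# Hypothetical category pages standing in for real HTTP responses.
FAKE_SHOP = {
    "/shoes?page=1": (
        '<span class="name">Runner</span><span class="price">$50</span>'
        '<a class="next" href="/shoes?page=2">Next</a>'
    ),
    "/shoes?page=2": (
        '<span class="name">Trail</span><span class="price">$65</span>'
        '<a class="next" href="/shoes?page=3">Next</a>'
    ),
    "/shoes?page=3": '<span class="name">Court</span><span class="price">$40</span>',
}

rows = scrape_listing("/shoes?page=1", FAKE_SHOP.get, max_pages=2)
print(rows)
```

The `max_pages` argument plays the role of the page count you select when enabling pagination: the loop stops either when that limit is reached or when a page has no “Next” link.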

Conclusion

An understanding of HTML will take you a long way when trying to scrape web pages. There are also a few things to keep in mind when scraping. Some sites use cookies to detect and block bots, while others use a robots.txt file to tell crawlers which pages they may request.
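Checking a site’s robots.txt rules before requesting a page can be done with Python’s standard `urllib.robotparser` module. In this minimal sketch the robots.txt content, the `my-scraper` user agent and the `shop.example` URLs are made up; a real scraper would download the file from the site with `set_url()` and `read()` instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an online shop.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

# Ask whether our (hypothetical) user agent may fetch each URL.
allowed = robots.can_fetch("my-scraper", "https://shop.example/shoes")
blocked = robots.can_fetch("my-scraper", "https://shop.example/checkout/cart")

# Seconds the site asks crawlers to wait between requests, if it says so.
delay = robots.crawl_delay("my-scraper")

print(allowed, blocked, delay)
```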

You also need to be careful about the number of requests you make. Too many requests could affect a site’s performance. A better approach is to check whether the site has a public API, as that will make things a lot easier for you. All in all, automating your search by building a web crawler puts the data in your hands, ready to be used in whatever way suits you best.