What is GPU Computing

Computing on a graphics processor (GPU) is becoming increasingly popular today is not in doubt. However, this does not belittle the role of the central processor. Moreover, if we talk about the vast majority of user applications, then today their performance is completely and completely dependent on the performance of the CPU. That is, the vast majority of user applications do not use GPU computing.

In general, GPU computing is mainly performed on specialized HPC systems for scientific calculations. But user applications that use GPU computing can be counted on the fingers. It should be noted right away that the term “GPU computing” in this case is not quite correct and can be misleading. The fact is that if an application uses GPU cloud computing, this does not mean that the central processor is idle.

Computing on the GPU does not imply transferring the load from the central processor to the graphic one. As a rule, the central processor remains busy at the same time, and the use of the graphics processor, along with the central one, can improve productivity, that is, reduce the task execution time. Moreover, the GPU itself acts as a kind of coprocessor for the CPU,

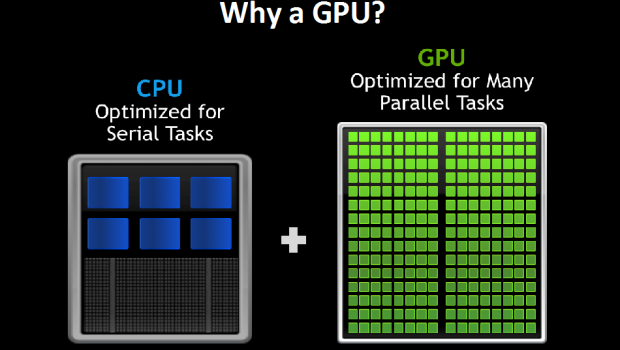

To understand why computing on the GPU is not a panacea and why it is incorrect to say that their computing capabilities are superior to the capabilities of the CPU, it is necessary to understand the difference between the CPU and GPU.

Differences in GPU and CPU architectures

CPU cores are designed to execute a single stream of sequential instructions with maximum performance, and GPUs are designed to quickly execute a very large number of parallel threads of instructions. This is the fundamental difference between GPUs and central ones. The CPU is a universal or general-purpose processor optimized to achieve high performance on a single instruction stream that processes both integers and floating-point numbers. At the same time, access to the memory with data and instructions occurs mainly randomly.

To increase CPU performance, they are designed to execute as many instructions as possible in parallel. For example, for this, in the processor cores, a block of extraordinary execution of commands is used, which allows reordering instructions not in the order of their arrival, which allows raising the level of parallelism of the instruction implementation at the level of one thread. Nevertheless, this still does not allow parallel execution of a large number of instructions, and the overhead of parallelizing instructions inside the processor core is very significant. That is why general-purpose processors do not have a very large number of execution units.

The graphics processor is fundamentally different. It was originally designed to execute a huge number of parallel threads of commands. Moreover, these command streams are parallelized initially, and there is simply no overhead for parallelizing instructions in the GPU. The graphics processor is designed to render images. In simple terms, at the input it takes a group of polygons, carries out all the necessary operations, and outputs pixels at the output. The processing of polygons and pixels is independent, they can be processed in parallel, separately from each other. Therefore, due to the initially parallel organization of work in the GPU, a large number of execution units are used, which are easy to download, as opposed to a sequential stream of instructions for the CPU.

GPUs and central processors also differ in terms of memory access. In the GPU, access to memory is easily predictable: if a texture Texel is read from memory, then after a while the time will come for neighbouring taxes. When recording, the same thing happens: if a pixel is written to the framebuffer, then after a few ticks the pixel located next to it will be recorded. Therefore, the GPU, unlike the CPU, simply does not need large cache memory, and textures require only a few kilobytes. The principle of working with memory is different for the GPU and CPU. So, all the modern GPU has multiple memory controllers, and indeed the graphics memory is faster, so the GPUs are much used for lower memory bandwidth, compared to universal processors, which is also very important for parallel calculations, operating with huge data streams.

The purpose processors used for most of the chip area occupied by the various buffer’s instructions and data, a decoding unit, a hardware branch prediction, reordering and block cache memory first, second and third levels. All these hardware units are needed to speed up the execution of a few command streams due to their parallelization at the processor core level.

The execution units themselves occupy relatively little space in the universal processor.

In the graphics processor, on the contrary, it is precisely the numerous execution units that occupy the main area, which allows it to simultaneously process several thousand command streams.

We can say that, unlike modern CPUs, GPUs are designed for parallel computing with a large number of arithmetic operations.

It is possible to use the computing power of graphic processors for non-graphic tasks, but only if the problem being solved allows parallelization of algorithms to hundreds of execution units available in the GPU. In particular, performing calculations on the GPU shows excellent results when the same sequence of mathematical operations is applied to a large amount of data. Moreover, the best results are achieved if the ratio of the number of arithmetic instructions to the number of memory accesses is large enough. This operation has less performance management requirements and does not require the use of capacious cache memory.

There are many examples of scientific calculations, where the advantage of the GPU over the CPU in terms of computing efficiency is undeniable. So, many scientific applications for molecular modeling, gas dynamics, fluid dynamics, and other things are perfectly adapted for GPU calculations.

So, if the algorithm for solving the problem can be parallelized into thousands of separate threads, then the efficiency of solving such a problem using the GPU can be higher than its solution using only a general-purpose processor. However, one cannot just take and transfer a solution to a problem from the CPU to the GPU, if only because the CPU and GPU use different commands. That is, when a program is written for a solution on the CPU, then a set of x86 instructions (or a set of instructions compatible with a specific processor architecture) is used, but for a GPU completely different instruction sets are used, which again take into account its architecture and capabilities. When developing modern 3D games, the DirectX and OrenGL APIs are used, allowing programmers to work with shaders and textures. Check Here Best Shared Hosting Server Companies 2020.